The SaaS + AI GTM Playbook

In speaking with sales leaders at traditional SaaS businesses that have recently launched AI capabilities, I’ve noticed some common issues they keep running into.

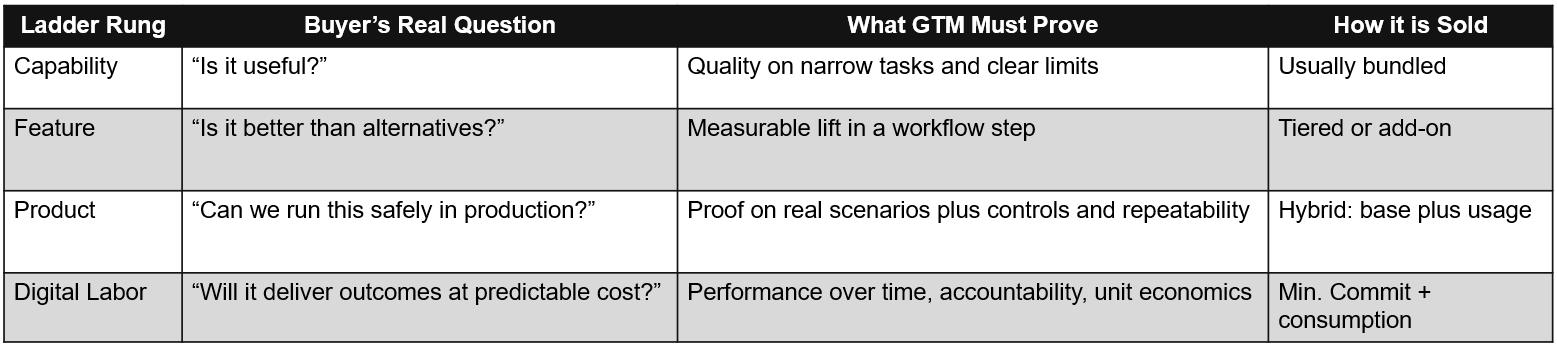

In my earlier post, “How to Monetize Your AI Roadmap,” I introduced the monetization ladder: Capability → Feature → Product → Digital Labor. This post is the GTM companion. It addresses the common mistakes I see GTM organizations make when introducing AI SKUs into their SaaS product portfolios.

AI does not just add new features. It changes what buyers need to believe, what they need to control, how they budget, and what success looks like after go-live. If you are a traditional SaaS company adding AI (building it, acquiring it, or embedding it through a partner), this is the retrofit GTM playbook.

Foundation: Be clear on what you are selling

Before you change GTM, get two things aligned across Product, Sales, CS, and Finance: (1) which rung of the monetization ladder you are on, and (2) which common pitfalls you want to deliberately design for. These two quick tools make the rest of the playbook easier to execute.

1. A simple GTM maturity matrix

Buyers change what they care about as you move up the ladder. Your proof, your controls, and your commercial model have to match the rung you are actually selling.

If your sales deck and your contract imply a higher rung than the product can reliably deliver, deals stall, and churn rises.

2. Common failures

Most AI GTM mistakes look unique in the moment. They are not. These patterns show up again and again in incumbents trying to bolt AI onto a proven SaaS motion:

You position your offering as Digital Labor, but it is a Feature. Buyers start asking for accountability, controls, and failure-handling that you cannot credibly address.

You launch broadly without clear boundaries. Users try it everywhere; it fails predictably, and the market concludes, “Your AI does not work.”

You price like SaaS seats while costs behave like compute. As adoption rises, margins erode unexpectedly, or buyers push back on unpredictable spend.

You rely on benchmarks for AI performance instead of customer scenarios. Proof looks great in the lab and collapses in real workflows.

You run CS with an adoption-only focus. Performance drifts, exceptions pile up, and renewals get held hostage to reliability.

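You upgrade models without regard for what breaks downstream. Workflows customers built around specific behavior break, and earlier proof results no longer hold.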

You never operationalize exception handling. Uncertain cases have nowhere to go, and humans quietly clean up the mess.

Playbook steps

Once you are aligned on where you are on the monetization ladder, here are the playbook steps that help avoid the common failures highlighted above. If you only do a few, start with Steps 1, 3, 4, 5, and 6 below.

1) Name what you are actually shipping

If what you shipped is a Capability (summarize, generate, classify), GTM is mostly enablement and packaging. If it is a Feature, GTM is about differentiation and adoption inside a workflow step. If it is a Product, GTM becomes proof, governance, and expansion, as customers now rely on an AI-driven workflow. If it is Digital Labor, GTM becomes operational and financial because you are selling work performed with variable costs and measurable output.

If you do not name the rung, you will oversell. Buyers will ask Digital Labor questions while you are still shipping Feature reality.
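To keep the rung explicit in planning and deal reviews, the mapping from Step 1 can be written down as data. This is a minimal Python sketch: `Rung`, `GTM_FOCUS`, and `oversell_gap` are hypothetical names for illustration, and the focus strings paraphrase the paragraph above. The gap check measures how far a deck or contract sits above the rung actually shipped.

```python
from enum import Enum


class Rung(Enum):
    """Rungs of the monetization ladder, ordered lowest to highest."""
    CAPABILITY = 1
    FEATURE = 2
    PRODUCT = 3
    DIGITAL_LABOR = 4


# What GTM is mostly about at each rung, paraphrased from Step 1.
GTM_FOCUS = {
    Rung.CAPABILITY: "enablement and packaging",
    Rung.FEATURE: "differentiation and adoption inside a workflow step",
    Rung.PRODUCT: "proof, governance, and expansion",
    Rung.DIGITAL_LABOR: "operational and financial: work performed, variable cost, measurable output",
}


def oversell_gap(shipped: Rung, pitched: Rung) -> int:
    """Number of rungs the pitch sits above what the product reliably delivers.

    Zero means the pitch matches reality. Anything above zero means buyers
    will ask questions (accountability, controls, failure handling) for a
    rung you are not on yet.
    """
    return max(0, pitched.value - shipped.value)


if __name__ == "__main__":
    shipped, pitched = Rung.FEATURE, Rung.DIGITAL_LABOR
    print(f"Shipped: {shipped.name}; GTM focus: {GTM_FOCUS[shipped]}")
    print(f"Oversell gap: {oversell_gap(shipped, pitched)} rung(s)")
```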

GTM takeaway: Write a one-sentence “what we sell” statement for the rung you are in, and build the sales motion and proof around that statement.

2) Pick one end-to-end workflow, not “AI across the platform.”

The fastest path to real revenue is still narrow and specific: one workflow with a clear beginning and end where you can define what “good” looks like, what “bad” looks like, and what happens when the system is uncertain.

Many incumbents try to launch AI everywhere. What they create instead is a thousand micro-demos and zero repeatable proof.

GTM takeaway: Start with one workflow you can measure and defend, then scale that pattern to adjacent workflows and segments.

3) Start with small installed-base rollouts.

Your installed base is an advantage, but it also increases the cost of getting it wrong. A shaky rollout does not fail quietly. It creates support load, trust issues, and confused expectations.

A sensible rollout looks like: start with a small set of trusted customers and one workflow; expand only after wins are repeatable and failure patterns are understood; make it the default only when controls, support, and training are ready.
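The three stages above can be expressed as explicit gates, so that only evidence moves a rollout forward. This is a minimal Python sketch, assuming you already measure how many customers in the current stage have repeatable wins and what share of failures still lack a known cause. The stage names, fields, and thresholds (`MIN_REPEAT_SHARE`, `MAX_UNEXPLAINED_FAILURES`) are hypothetical placeholders to agree on with Product and CS.

```python
from dataclasses import dataclass

# Hypothetical gate thresholds; agree on real values before the rollout starts.
MIN_REPEAT_SHARE = 0.8          # share of current customers with repeatable wins
MAX_UNEXPLAINED_FAILURES = 0.1  # share of failures not yet mapped to a known cause


@dataclass
class RolloutEvidence:
    customers_in_stage: int
    customers_with_repeat_wins: int
    unexplained_failure_share: float
    controls_ready: bool
    support_ready: bool
    training_ready: bool


def next_stage(current: str, ev: RolloutEvidence) -> str:
    """Advance at most one stage (limited -> expanded -> default) when its gate is met."""
    if current == "limited":
        repeat_share = ev.customers_with_repeat_wins / max(ev.customers_in_stage, 1)
        wins_repeatable = repeat_share >= MIN_REPEAT_SHARE
        failures_understood = ev.unexplained_failure_share <= MAX_UNEXPLAINED_FAILURES
        if wins_repeatable and failures_understood:
            return "expanded"
    elif current == "expanded":
        if ev.controls_ready and ev.support_ready and ev.training_ready:
            return "default"
    return current  # gate not met (or already default): stay put
```

Internal excitement is deliberately not an input: the only way to advance is to change the evidence.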

GTM takeaway: Use measurable gates to expand rollout, not internal excitement. Earn the right to go broad.

4) Replace “pilot” with a structured “proof run.”

A pilot is mainly about implementation and adoption. A proof run is mainly about performance and trust. Enterprise buyers already know how to run pilots. With AI, the real question is different: when can we trust this enough to put it into day-to-day operations?

So the pilot needs to become a proof run: use real customer cases, agree up front on what “good enough” means, score the results, and track where it fails and why (not just the successes).

A “pass bar” is simply that shared definition of success. Example: “We will test 50 real cases. This passes if it hits at least X percent acceptance, stays under Y seconds, and anything uncertain is flagged for human review.”

ServiceNow's AI agents deploy with scenario packs that test deflection quality before rollout.

GTM takeaway: Productize a repeatable proof kit, including a set of scenarios, pass bars, scorecards, and a simple failure taxonomy to allow efficient iterations between GTM and product.
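A proof kit can start as little more than a scorecard function. The Python sketch below scores a proof run against the example pass bar from this step (acceptance of at least X percent, latency under Y seconds, every uncertain case flagged for human review) and tallies failures by taxonomy label. The field names, the reading of “stays under Y seconds” as a per-case limit, and the failure labels used in practice are assumptions, not a standard format.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class CaseResult:
    case_id: str
    accepted: bool                        # did the customer's reviewer accept the output?
    latency_s: float
    uncertain: bool                       # did the system report low confidence?
    flagged_for_review: bool              # was the case routed to a human?
    failure_reason: Optional[str] = None  # taxonomy label when not accepted


@dataclass
class PassBar:
    min_acceptance: float  # the "X percent", as a fraction
    max_latency_s: float   # the "Y seconds", applied to every case


def score_proof_run(results: list[CaseResult], bar: PassBar) -> dict:
    acceptance = sum(r.accepted for r in results) / len(results)
    slow = [r.case_id for r in results if r.latency_s > bar.max_latency_s]
    # An uncertain case that was NOT sent to a human breaks the pass bar outright.
    unflagged = [r.case_id for r in results if r.uncertain and not r.flagged_for_review]
    failures = Counter(r.failure_reason or "uncategorized" for r in results if not r.accepted)
    return {
        "acceptance": acceptance,
        "slow_cases": slow,
        "unflagged_uncertain": unflagged,
        "failure_taxonomy": failures.most_common(),
        "passed": acceptance >= bar.min_acceptance and not slow and not unflagged,
    }
```

Returning the failure taxonomy alongside the verdict is what lets Product and GTM iterate on the same list after each run, rather than on anecdotes.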

5) Evolve the KPIs you track weekly.

Once AI is in the product, the usual SaaS dashboards start lying to you. ARR can look great while the workflow fails in the wild. Adoption can look high while humans quietly clean up the mess. Usage can spike even as margins worsen.

So track four operating numbers that answer one question: is this working at scale, at a cost we can live with?

Time to first successful outcome (by segment): how long it takes a customer to get a real win, not just log in.

Top failure reasons: why it fails, categorized consistently so you can fix the right things.

Repeatability by segment: whether the same workflow keeps working across customers and contexts.

Cost per successful outcome: what it costs to deliver a completed result, not cost per interaction.
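The four numbers above can be computed from a simple log of attempted outcomes. This is a minimal Python sketch under stated assumptions: each record carries a customer, segment, day since go-live, success flag, compute cost, human minutes, and an optional failure label. “Repeatability” is defined here, hypothetically, as the share of customers in a segment whose success rate clears a bar, and human review time is priced in so that shadow work shows up in cost per successful outcome. Both rate constants are placeholders.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from statistics import median
from typing import Optional

HUMAN_COST_PER_MINUTE = 1.0    # hypothetical loaded cost of review/cleanup time
REPEATABLE_SUCCESS_RATE = 0.8  # hypothetical bar for "keeps working" per customer


@dataclass
class Outcome:
    customer: str
    segment: str
    day: int                    # days since this customer's go-live
    success: bool
    compute_cost: float         # inference spend for this attempt
    human_minutes: float = 0.0  # time people spent reviewing or fixing it
    failure_reason: Optional[str] = None


def weekly_kpis(outcomes: list[Outcome]) -> dict:
    per_customer = defaultdict(list)
    for o in outcomes:
        per_customer[(o.segment, o.customer)].append(o)

    first_win_days = defaultdict(list)  # segment -> days to first success, per customer
    clears_bar = defaultdict(list)      # segment -> did each customer clear the bar?
    for (segment, _), rows in per_customer.items():
        win_days = [o.day for o in rows if o.success]
        if win_days:
            first_win_days[segment].append(min(win_days))
        clears_bar[segment].append(len(win_days) / len(rows) >= REPEATABLE_SUCCESS_RATE)

    successes = sum(o.success for o in outcomes)
    # Include human minutes so shadow work is not hidden from unit economics.
    total_cost = sum(o.compute_cost + o.human_minutes * HUMAN_COST_PER_MINUTE for o in outcomes)
    failures = Counter(o.failure_reason or "uncategorized" for o in outcomes if not o.success)
    return {
        "median_days_to_first_success": {s: median(d) for s, d in first_win_days.items()},
        "top_failure_reasons": failures.most_common(3),
        "repeatability": {s: sum(f) / len(f) for s, f in clears_bar.items()},
        "cost_per_successful_outcome": total_cost / successes if successes else float("inf"),
    }
```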

GTM takeaway: Use these four numbers as rollout gates. Scale only when outcomes are repeatable and unit economics are understood.

6) Make safety rails part of the product.

When buyers slow down, it is usually not because they doubt the upside. It is because they do not know where the AI will go off the rails.

The deal-unblockers are boring but decisive: controls (who can do what), logs (what happened and why), data limits (what it can access), fail-safes (what happens when it is unsure), and do-not-use zones (where you do not recommend it). Buyers often approve deals based on what they see in this list, not the core demo.
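The five rails named above map naturally onto a small policy object that every AI action passes through before it runs. The Python sketch below is a hypothetical illustration, not any vendor's API: role permissions stand in for controls, a source allowlist for data limits, a confidence floor for the fail-safe, a set of blocked workflows for do-not-use zones, and an in-memory list for the log.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Guardrails:
    allowed_actions: dict[str, set[str]]  # controls: role -> actions it may trigger
    readable_sources: set[str]            # data limits: what the AI may access
    do_not_use: set[str]                  # workflows you do not recommend it for
    min_confidence: float                 # fail-safe: below this, a human decides
    audit_log: list[dict] = field(default_factory=list)  # logs: what happened and why

    def decide(self, role: str, action: str, workflow: str,
               sources: set[str], confidence: float) -> str:
        """Return 'allow', 'escalate', or 'block', and record the reason."""
        if workflow in self.do_not_use:
            verdict, why = "block", f"{workflow} is a do-not-use zone"
        elif action not in self.allowed_actions.get(role, set()):
            verdict, why = "block", f"role {role} may not {action}"
        elif not sources <= self.readable_sources:
            verdict, why = "block", f"outside data limits: {sorted(sources - self.readable_sources)}"
        elif confidence < self.min_confidence:
            verdict, why = "escalate", f"confidence {confidence:.2f} below {self.min_confidence:.2f}"
        else:
            verdict, why = "allow", "within controls"
        self.audit_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "role": role, "action": action, "workflow": workflow,
            "verdict": verdict, "why": why,
        })
        return verdict
```

In a demo, walking a buyer through the audit log entries for an allowed, an escalated, and a blocked request is often more persuasive than the happy path alone.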

GTM takeaway: Show boundaries and controls early in the sales cycle, and invest in making them real product capabilities, not promises.

7) Update the commercial model for variable cost and “work performed.”

In classic SaaS, you pay for access. Seats and tiers work because costs are mostly fixed, and usage usually improves margins.

AI changes that. More usage can mean more cost. That is why the market is shifting toward credits, consumption, and bounded usage that map to work performed rather than just software access.
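A few lines of arithmetic show why seat pricing and compute-like costs collide. The sketch assumes, hypothetically, a $100 monthly seat, $10 of fixed cost per seat, and $0.02 of model cost per AI action; none of these are market figures.

```python
def seat_gross_margin(seat_price: float, fixed_cost: float,
                      actions: int, cost_per_action: float) -> float:
    """Monthly gross margin on one seat when each AI action carries a variable cost."""
    cost = fixed_cost + actions * cost_per_action
    return (seat_price - cost) / seat_price


if __name__ == "__main__":
    for actions in (500, 2_000, 5_000):
        margin = seat_gross_margin(seat_price=100.0, fixed_cost=10.0,
                                   actions=actions, cost_per_action=0.02)
        print(f"{actions:>5} actions/seat/month -> gross margin {margin:.0%}")
```

With these inputs, margin falls from 80% at 500 actions to 50% at 2,000 and turns negative at 5,000, while revenue per seat never moves. That is the “adoption rises, margins surprise” failure in miniature.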

Salesforce's Agentforce pricing publishes credit consumption per action type, giving procurement clear spend forecasting.

The GTM lesson is not “switch to usage.” It is to make it easy for a buyer to answer three questions: what drives spend, how predictable is it, and what controls prevent surprise bills.
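The three buyer questions can be answered from a rate card and a volume forecast. The Python sketch assumes a hypothetical credit rate card per action type (the action names, credit counts, and price per credit are invented for illustration). It reports credits by driver (what drives spend), a low/high band around the forecast volume (how predictable it is), and applies a monthly cap as one possible control against surprise bills.

```python
from dataclasses import dataclass

# Hypothetical rate card: credits consumed per action type, and price per credit.
CREDITS_PER_ACTION = {"answer_question": 2, "update_record": 5, "run_workflow": 20}
PRICE_PER_CREDIT = 0.01


@dataclass
class SpendForecast:
    credits_by_driver: dict[str, int]  # what drives spend
    expected: float                    # spend at the forecast volume
    low: float                         # spend at the low end of the volume band
    high: float                        # spend at the high end of the volume band
    billable_high: float               # worst case once the cap is applied
    cap_would_bind: bool               # True if usage might hit the cap


def forecast_month(volumes: dict[str, int], band: float, monthly_cap: float) -> SpendForecast:
    """Forecast monthly spend; `band` is the +/- fractional uncertainty on volume."""
    credits = {action: n * CREDITS_PER_ACTION[action] for action, n in volumes.items()}
    expected = sum(credits.values()) * PRICE_PER_CREDIT
    low, high = expected * (1 - band), expected * (1 + band)
    return SpendForecast(credits, expected, low, high, min(high, monthly_cap), high > monthly_cap)
```

A forecast in this shape gives Finance a range rather than a point estimate, and gives Procurement a single number (the cap) to approve.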

GTM takeaway: Repackage AI with spend drivers and controls that Finance can understand and Procurement can approve.

8) Change the funnel: demo → proof → controls → scale

In traditional SaaS, the flow is usually demo → roadmap → close. With AI, the flow becomes demo → proof → controls → scale because buyers need evidence and guardrails before they operationalize it.

A clear pass bar is what keeps “proof” from turning into vibes. Agree up front on success thresholds (quality, latency, human review rate) and do not scale until you hit them on real cases.

GTM takeaway: Lead with proof and controls earlier. Make the sales motion about operational trust, not feature persuasion.

9) Update sales compensation for usage and outcomes.

In classic SaaS, comp is straightforward: close committed ARR, collect commission. In AI with usage or outcome-based pricing, a signed contract can still disappoint if activation and ramp stall.

A workable pattern is to pay on committed minimums (or a base platform fee), then true-up commissions on realized usage quarterly. Add activation milestones to keep reps engaged after signature. Protect margins by tying variable payouts to profitable usage, not raw usage.
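The comp pattern above can be sketched in a few lines. Every number here is a hypothetical placeholder: an 8% rate on the committed minimum at signature, a 5% quarterly true-up on realized usage above the minimum, a flat bonus per activation milestone, and a 50% gross-margin floor below which usage earns no true-up. The point is the structure, not the rates.

```python
BASE_RATE = 0.08          # paid on the committed minimum at signature
TRUE_UP_RATE = 0.05       # paid quarterly on realized usage above the minimum
ACTIVATION_BONUS = 2_000  # per activation milestone reached after signature
MIN_USAGE_MARGIN = 0.50   # usage below this gross margin earns no true-up


def signing_commission(committed_minimum: float) -> float:
    """Paid when the contract is signed, on the committed minimum only."""
    return committed_minimum * BASE_RATE


def quarterly_true_up(quarter_minimum: float, realized_usage: float,
                      usage_gross_margin: float, milestones_hit: int) -> float:
    """Commission earned after the quarter closes, on top of the signing payout."""
    overage = max(0.0, realized_usage - quarter_minimum)
    profitable = usage_gross_margin >= MIN_USAGE_MARGIN
    usage_payout = overage * TRUE_UP_RATE if profitable else 0.0
    return usage_payout + milestones_hit * ACTIVATION_BONUS
```

The margin floor is the piece that stops the plan from paying for unbounded, unprofitable usage; the activation bonus keeps reps engaged after signature even when usage has not yet ramped.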

GTM takeaway: Commission the close, but also commission activation and profitable ramp. Do not pay people to create unbounded, unprofitable usage.

10) Evolve Customer Success from adoption to performance management.

Unlike traditional SaaS, with AI, “adoption” is not enough. Post-go-live, the question is: is the system performing, and is performance stable?

Intercom is unusually explicit here: it provides a performance dashboard for its Fin agent with metrics like involvement rate and resolution rate, and it publishes definitions for those metrics.

That is the mental shift incumbents need. CS should run a cadence that covers:

weekly failure review,

monthly performance report by segment and scenario,

ongoing content, prompt, and routing optimization,

and escalation policy tuning.

This also means Customer Success can’t be only traditional CS roles. Keeping an AI workflow running over time requires more technical skills: monitoring performance, spotting drift, retesting changes, and tuning prompts or models. Trustworthy AI isn’t a one-time launch decision; it has to be tested and monitored continuously.
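Spotting drift can start with something as plain as comparing each segment-and-scenario success rate this week against a baseline window. The Python sketch below is one hypothetical approach, not a statistical test: the 5-point drop threshold and the 30-attempt minimum are placeholder values, and low-traffic cells are skipped rather than judged.

```python
from dataclasses import dataclass


@dataclass
class Window:
    successes: int
    attempts: int

    @property
    def rate(self) -> float:
        return self.successes / self.attempts if self.attempts else 0.0


Key = tuple[str, str]  # (segment, scenario)


def drift_alerts(baseline: dict[Key, Window], current: dict[Key, Window],
                 max_drop: float = 0.05, min_attempts: int = 30) -> list[tuple[str, str, float]]:
    """Flag (segment, scenario) cells whose success rate fell more than max_drop below baseline."""
    alerts = []
    for key, base in baseline.items():
        cur = current.get(key)
        if cur is None or cur.attempts < min_attempts:
            continue  # too little traffic to judge automatically; leave for the weekly review
        drop = base.rate - cur.rate
        if drop > max_drop:
            alerts.append((key[0], key[1], round(drop, 3)))
    return sorted(alerts)
```

The alert list is a natural agenda for the weekly failure review, and the same keys line up with the monthly report by segment and scenario.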

Intercom also built a dedicated AI quality team after discovering traditional CS couldn't debug Fin's probabilistic failures.

GTM takeaway: Stand up a small AI Ops capability and make CS accountable for outcomes in production, not just onboarding and renewals.

11) Plan for model evolution, or you will break customers.

When the underlying model changes, behavior can change. That can break workflows, invalidate proof results, and reset trust. If you embed OpenAI and they deprecate the model with 90 days’ notice, what happens to customers who built SOPs around specific behavior?

So, model evolution needs a GTM answer: version pinning where feasible, regression tests on customer scenarios, release notes written in workflow terms, and an explicit policy for when proof must be re-run.
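A regression gate for model upgrades can compare results on the same customer scenario pack under the pinned model and the candidate. The Python sketch assumes pass/fail results keyed by customer and scenario are already available. The re-run policy it encodes (any regression triggers a fresh proof run for that customer, and a scenario missing from the candidate run counts as a regression) is a hypothetical example of the explicit policy this step calls for.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScenarioResult:
    scenario_id: str
    customer: str
    passed: bool


def upgrade_report(pinned: list[ScenarioResult], candidate: list[ScenarioResult]) -> dict:
    """Compare the candidate model against the pinned one on identical customer scenarios."""
    before = {(r.customer, r.scenario_id): r.passed for r in pinned}
    after = {(r.customer, r.scenario_id): r.passed for r in candidate}
    # A scenario that passed before and fails (or was not run) now is a regression.
    regressions = sorted(k for k, ok in before.items() if ok and not after.get(k, False))
    fixed = sorted(k for k, ok in after.items() if ok and not before.get(k, False))
    return {
        "ship": not regressions,
        "regressions": regressions,
        "fixed": fixed,
        "rerun_proof_for": sorted({customer for customer, _ in regressions}),
    }
```

The `regressions` and `fixed` lists are also raw material for release notes written in workflow terms rather than model terms.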

GTM takeaway: Treat model upgrades like breaking changes. Re-test the workflows customers rely on before you ship updates into production.

12) Do not let GTM be the bottleneck.

In some categories, AI capabilities became table stakes within 12 months (developer tools, customer support). Speed in making AI deployable beats speed in adding AI features. The trap is moving fast in product while GTM is stuck in slow, bespoke pilots and late-stage trust conversations.

GTM takeaway: Scale a repeatable proof-and-trust motion so you can move quickly without breaking customers.

Playbook Checklist

Another way to make sure you are truly ready to scale AI into your GTM is to have clean answers to these questions:

1. What rung of the monetization ladder are we selling, in plain language?

2. What is the single workflow we can prove end-to-end?

3. What is the right set of customers to start testing with?

4. Do we have a “scenario pack” and “pass bar”? Can sales run it repeatedly?

5. Do we track failures as rigorously as wins?

6. Can we show controls, logs, and data boundaries in the demo?

7. Do we have spending controls and a way to forecast consumption?

8. What happens when confidence is low?

9. How do we prevent “human shadow work” from hiding true cost?

10. Do we have an AI Ops cadence after go-live (weekly, monthly) as part of our CS function?

11. What happens when the model changes or is deprecated?

12. Does sales comp reward activation and profitable ramp-up, not just initial signatures?

If you’re building, buying, or operating in this space, I’d love to compare notes.

You can reach me at faraaz@inorganicedge.com or on LinkedIn.