AI-Ready M&A: How Acquirers Should Evaluate AI Compatibility

One of the things I enjoy most about my role is the chance to meet great companies every week. Smart founders, sharp product teams, and category-defining ideas. Over the past few years, that’s meant spending a lot of time with emerging AI players in the CX ecosystem. Some are reshaping workflows. Some are rethinking how decisioning should work. And a few are building things that feel like the early signs of a new architecture.

Talking to so many teams is a privilege, but it also forces you to stay sharp. You have to keep learning. You have to stay curious. You also have to become more discerning about what makes a company a good M&A or partnership candidate. The pace of change and the sheer number of companies showing up can be overwhelming if you don’t have a way to organize your thinking.

I’ve attempted to simplify the problem by developing a practical framework to assess whether an AI product could scale inside a larger SaaS platform. The same factors matter internally, because at the foundational level the requirements for building AI and for absorbing AI are identical.

This post is my attempt to consolidate my thoughts in one place. It’s not perfect, and I’m sure others will see things I’ve missed. However, I hope it gives operators and acquirers a useful starting point. A quick note: I’ve been focused on the CX space for the past decade, and that is the frame from which I approached this post. The concepts, however, apply just as well to other industries.

Why AI-Readiness Matters More Than the Demo

Most AI vendors can produce a great demo. The real question is whether their underlying product, data, and engineering foundations can support AI at scale inside a larger platform.

If they can’t, you don’t just inherit a technology gap; you inherit:

slow integration

brittle data pipelines

limited workflow attachment

expensive rework

and a roadmap that won’t deliver what was promised

AI-readiness isn’t about clever prompts or flashy UX.

It’s about whether the company has built the foundations needed for durable value.

Across dozens of conversations with teams in CX and adjacent markets, nine things consistently separate “demo-ready” companies from “AI-ready” ones:

Data

Workflows

Decisioning

Engineering

Business Model

GTM

Security & Governance

Unit Economics of Inference

Culture & Talent

Below is how I now think about each of them, in plain language, with a short table for the key checks. A quick note before we dive in: the checklists I share here are condensed versions that focus on the items I felt were most important. For those interested, please reach out to me at my email or on LinkedIn, and I’d be happy to share the comprehensive versions.

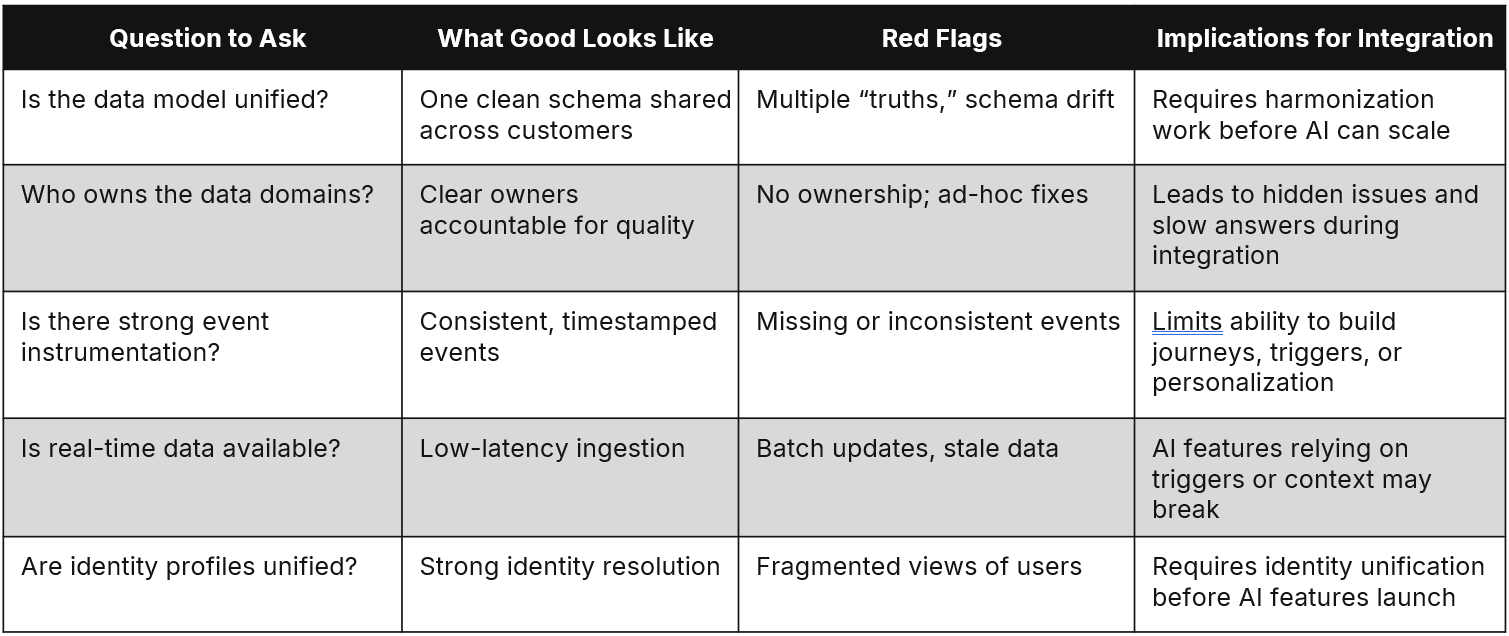

1. Data: The Foundation That Makes or Breaks AI

AI is only as good as the data it sits on. Clean, unified, well-structured data is the strongest predictor that an AI roadmap is real. In CX, where journeys, personalization, and messaging depend on real-time context, data quality directly determines output quality.

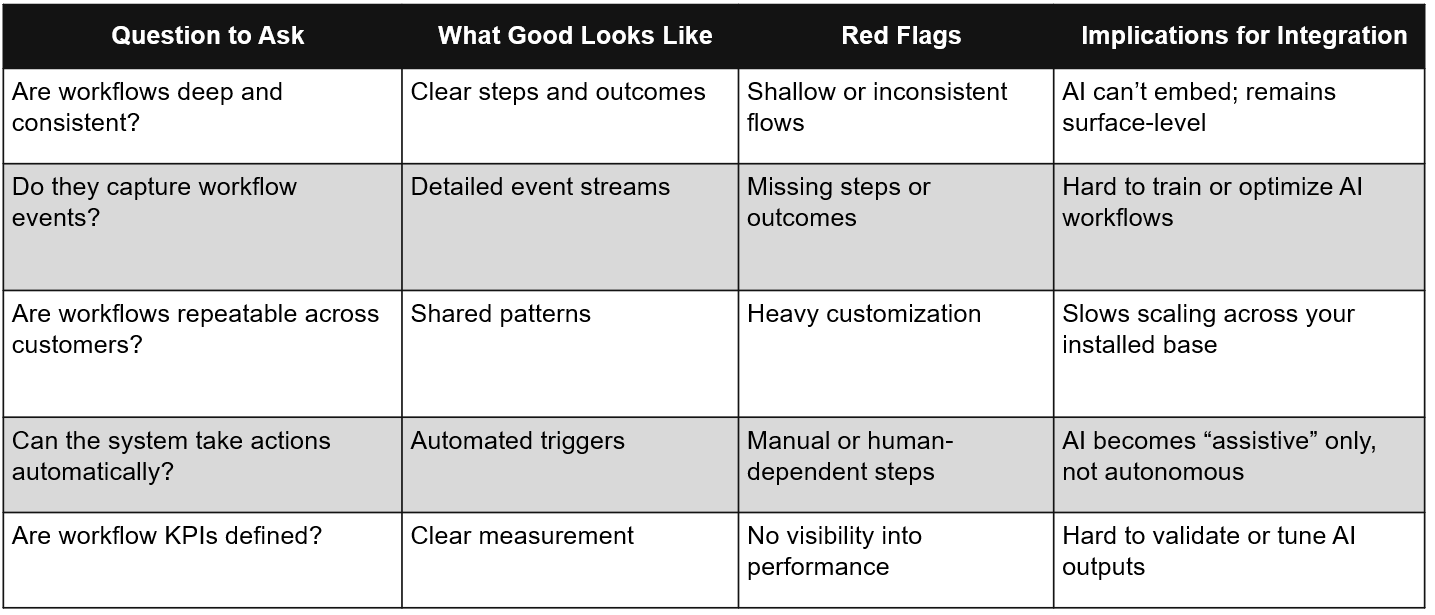

2. Workflows: Where AI Actually Creates Leverage

AI delivers value when it attaches to meaningful workflows. The deeper and more repeatable the workflow, the more AI can automate, optimize, or guide it. In CX, this includes agent guidance, journey orchestration, and conversational flows. Without real workflows, AI becomes a clever trick, not a strategic advantage.

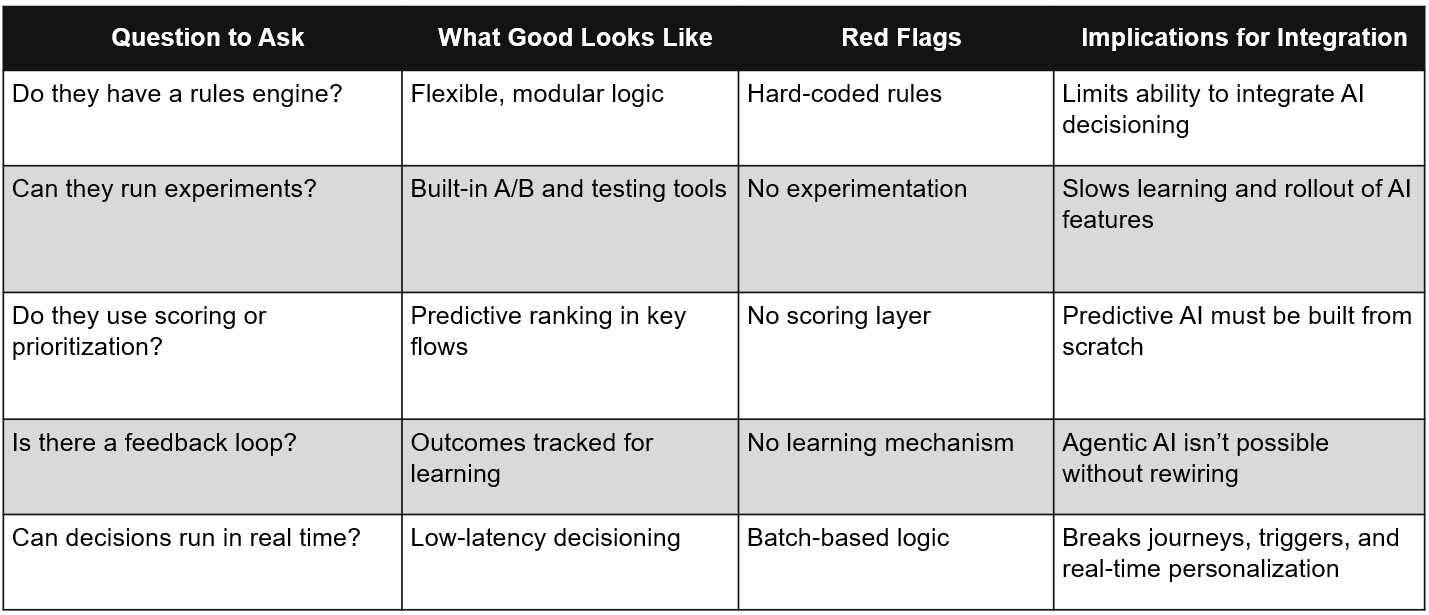

3. Decisioning: The Control Layer for AI-Native Products

AI doesn’t live in isolation. It needs a decision layer that determines when to act, what to recommend, and how to learn. Rules engines, experimentation harnesses, and scoring systems are the operating systems AI depends on. They are the feedback mechanisms that let the model iterate and improve with each cycle, much like a child learning to ride a bike by steering, adjusting, and trying again.

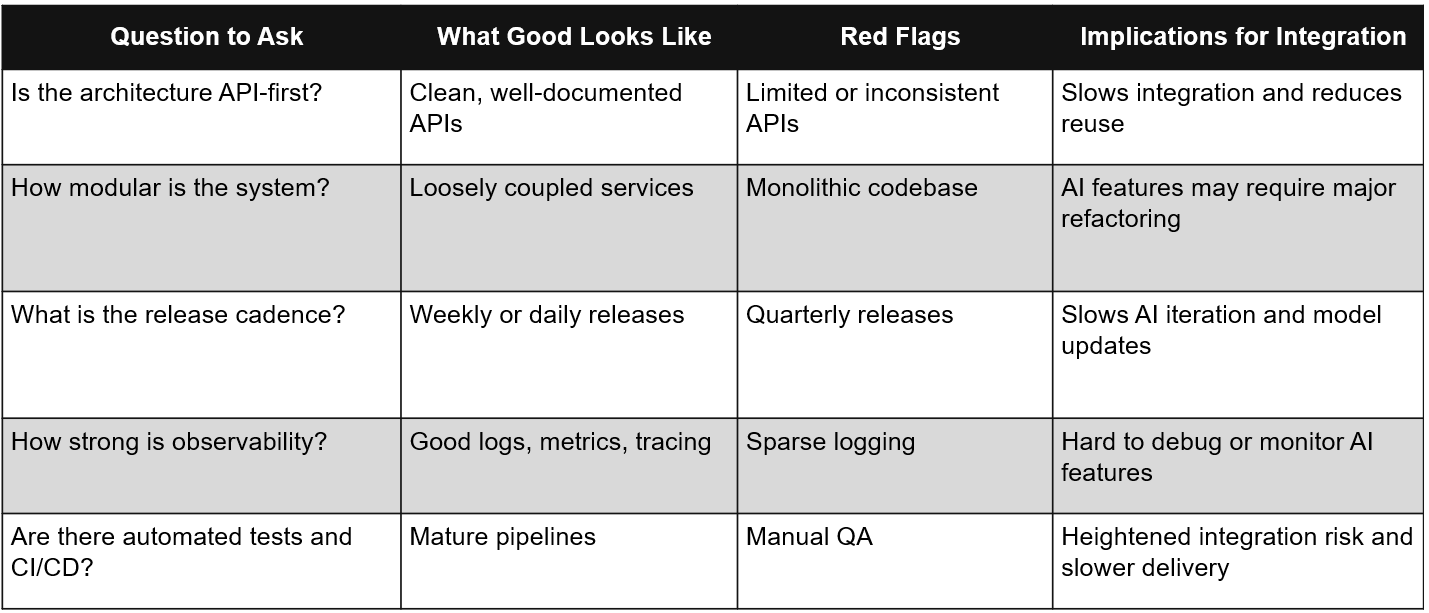

4. Engineering: Whether the Product Can Sustain AI

You can fake an AI demo with a clever wrapper.

You can’t fake the engineering needed to scale it.

AI requires reliable pipelines, observability, APIs, and fast release cycles. Without them, an AI model can’t get fresh data, connect to real workflows, or improve quickly enough to deliver meaningful value. Engineering maturity is often the single biggest predictor of whether a tuck-in actually accelerates a roadmap or becomes a months-long rewrite. It is just as critical in determining whether a SaaS platform is ready to take advantage of AI.

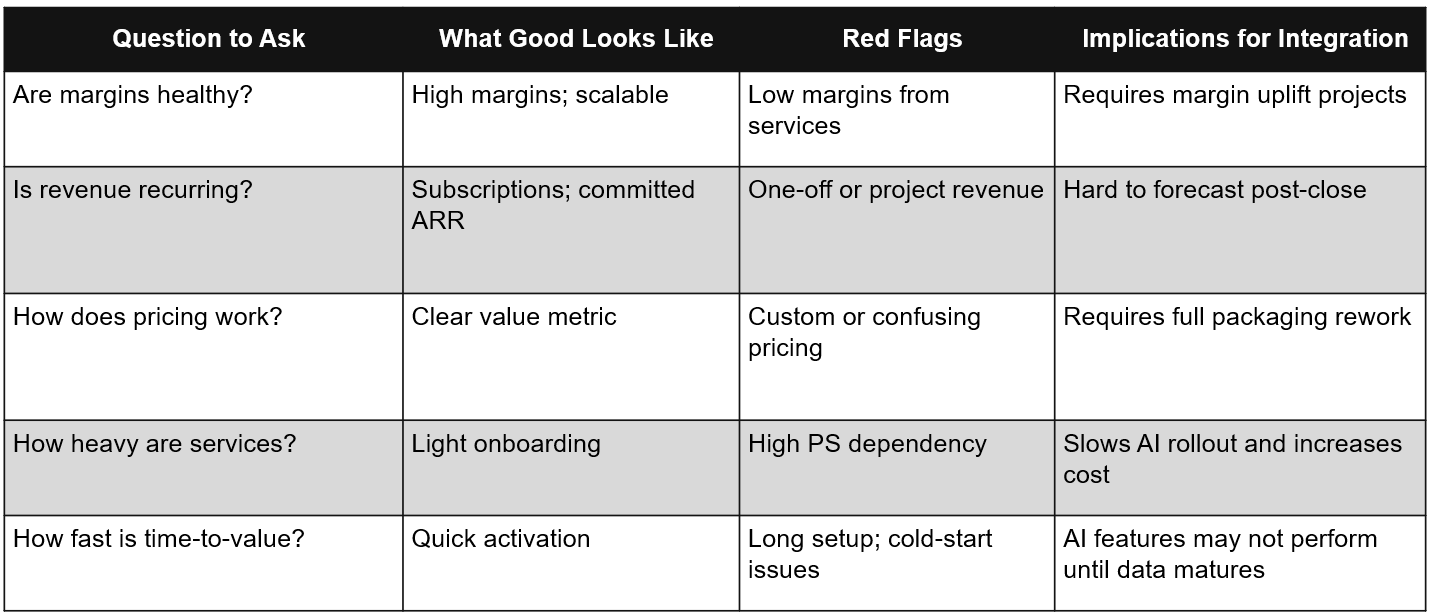

5. Business Model: Whether the Economics Can Support AI

A strong AI product with weak economics won’t scale. In fact, weak economics are often a symptom of deeper underlying problems, and AI amplifies them, especially when onboarding is long or data-heavy. Gross margin, recurring revenue, pricing, services mix, and time-to-value all determine whether an AI tuck-in becomes a meaningful contributor.

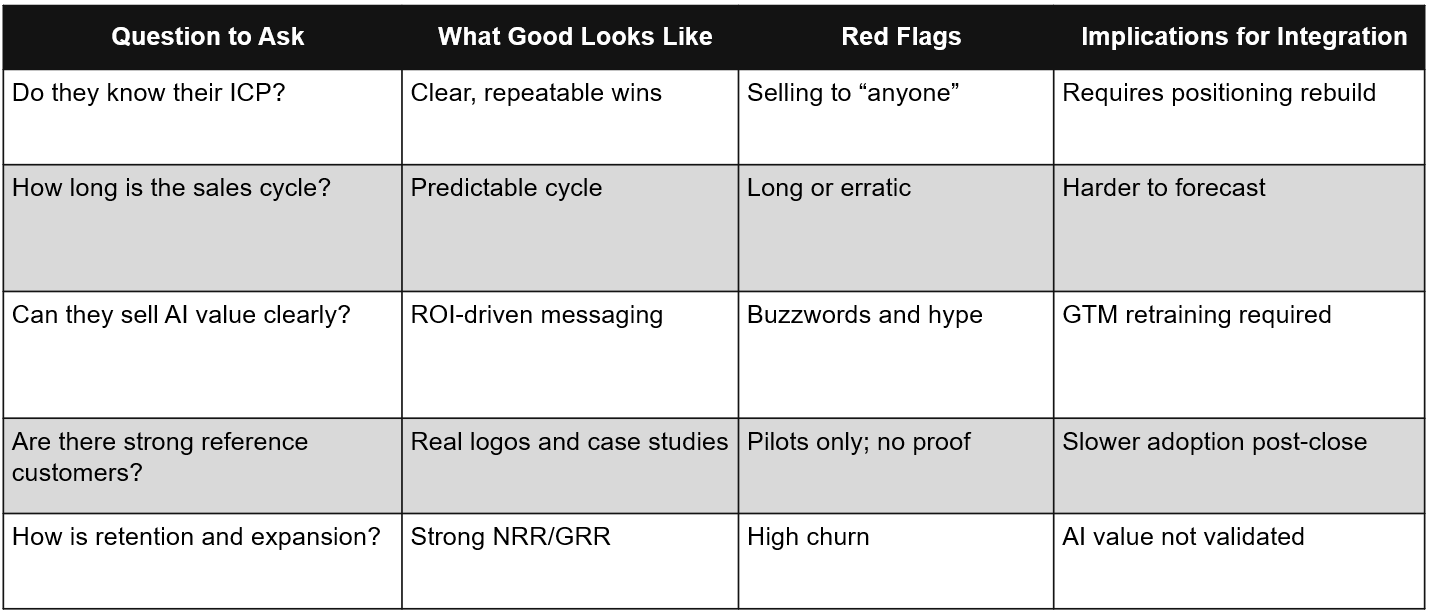

6. GTM: Whether the Vendor Can Sell and Scale AI Value

The best AI features in the world won’t help if the company can’t sell the value. ICP clarity, sales cycles, retention, and reference customers matter more in AI than in traditional SaaS. If GTM is shaky, the acquirer has to rebuild everything from positioning to proof points.

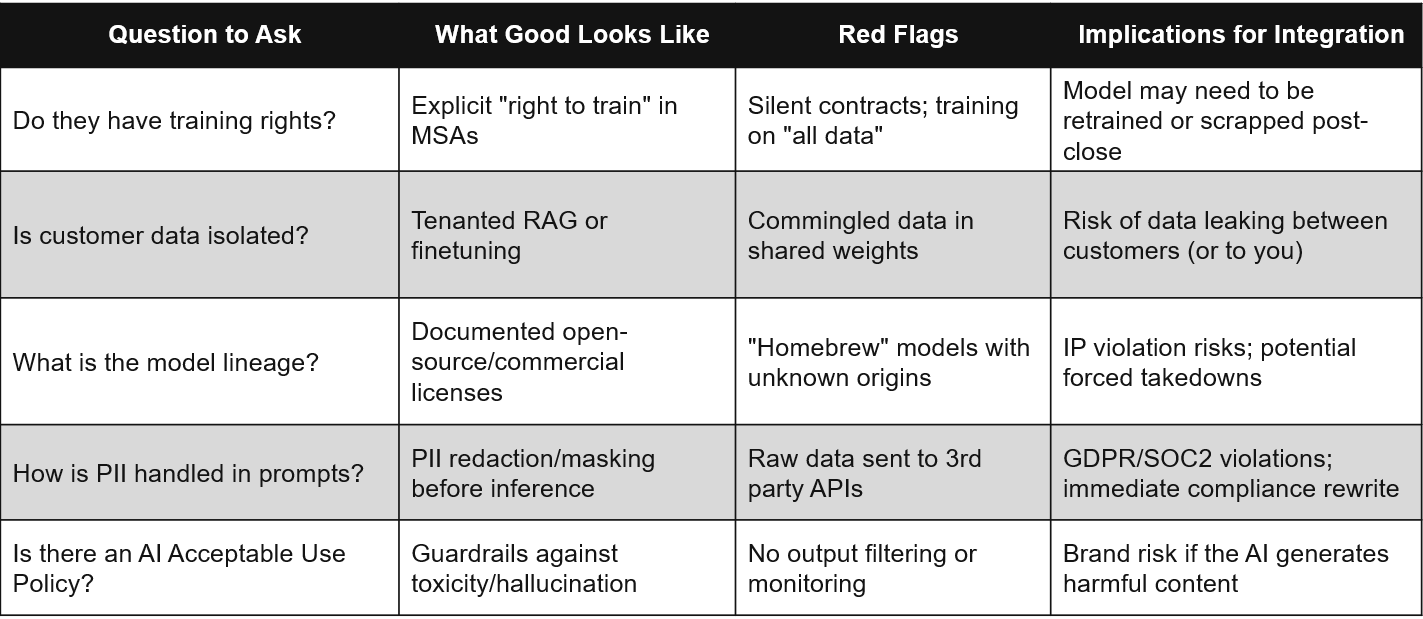

7. Security & Governance: The Hidden Liability Layer

In traditional SaaS, security diligence focuses on encryption and access control. In AI M&A, the risks are legal and existential. If a company has trained its proprietary models on customer data without explicit consent, or if it is leaking IP into public models via an API, the asset you are buying might be legally toxic. You aren't just buying code; you are buying their data lineage and liability.

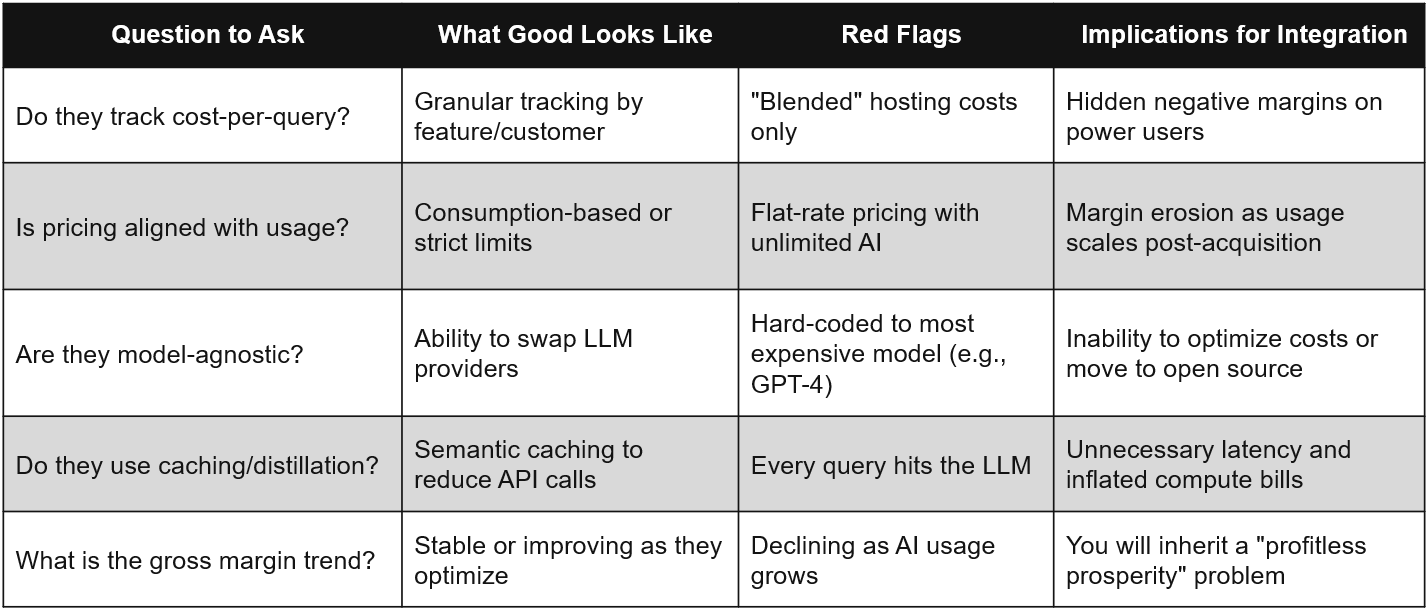

8. Unit Economics of Inference: The Silent Margin Killer

AI introduces a variable cost structure that traditional SaaS doesn't have: inference. A company might show strong growth, but if their feature usage costs scale linearly (or exponentially) while their pricing remains flat, they are bleeding margin with every query. "AI-ready" means the product delivers intelligence profitably, not just impressively.
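To make the "bleeding margin" point concrete, here is a minimal Python sketch built entirely on assumed numbers: a $1,000 monthly fee, $200 of non-inference cost to serve, and $0.01 of inference cost per AI query. The `PricingModel` class and every figure in it are hypothetical illustrations, not data from any real company. It compares a purely flat-priced account with one that adds an assumed $0.02 usage charge per query.

```python
from dataclasses import dataclass


@dataclass
class PricingModel:
    """Hypothetical account economics; every number passed in is an illustrative assumption."""
    monthly_fee: float       # flat subscription revenue per month ($)
    price_per_query: float   # usage-based revenue per AI query ($); 0.0 means purely flat pricing
    base_cost: float         # non-inference cost to serve per month ($)
    cost_per_query: float    # blended inference cost per AI query ($)

    def revenue(self, queries: int) -> float:
        return self.monthly_fee + self.price_per_query * queries

    def cost(self, queries: int) -> float:
        # Inference cost grows linearly with usage; the base cost does not.
        return self.base_cost + self.cost_per_query * queries

    def gross_margin(self, queries: int) -> float:
        """Gross margin as a fraction of revenue at a given monthly query volume."""
        rev = self.revenue(queries)
        return (rev - self.cost(queries)) / rev


# Assumed scenarios: identical costs, different pricing.
flat = PricingModel(monthly_fee=1000.0, price_per_query=0.0, base_cost=200.0, cost_per_query=0.01)
metered = PricingModel(monthly_fee=1000.0, price_per_query=0.02, base_cost=200.0, cost_per_query=0.01)

for queries in (10_000, 50_000, 100_000):
    print(f"{queries:>7} queries/month  flat: {flat.gross_margin(queries):6.0%}  "
          f"metered: {metered.gross_margin(queries):6.0%}")
```

Under these assumptions, the flat-priced account falls from 70% gross margin at 10,000 queries to 30% at 50,000 and to -20% at 100,000, so its most engaged customers are its least profitable. The metered account stays between 60% and 75% across the same range, because revenue scales with the same driver as cost.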

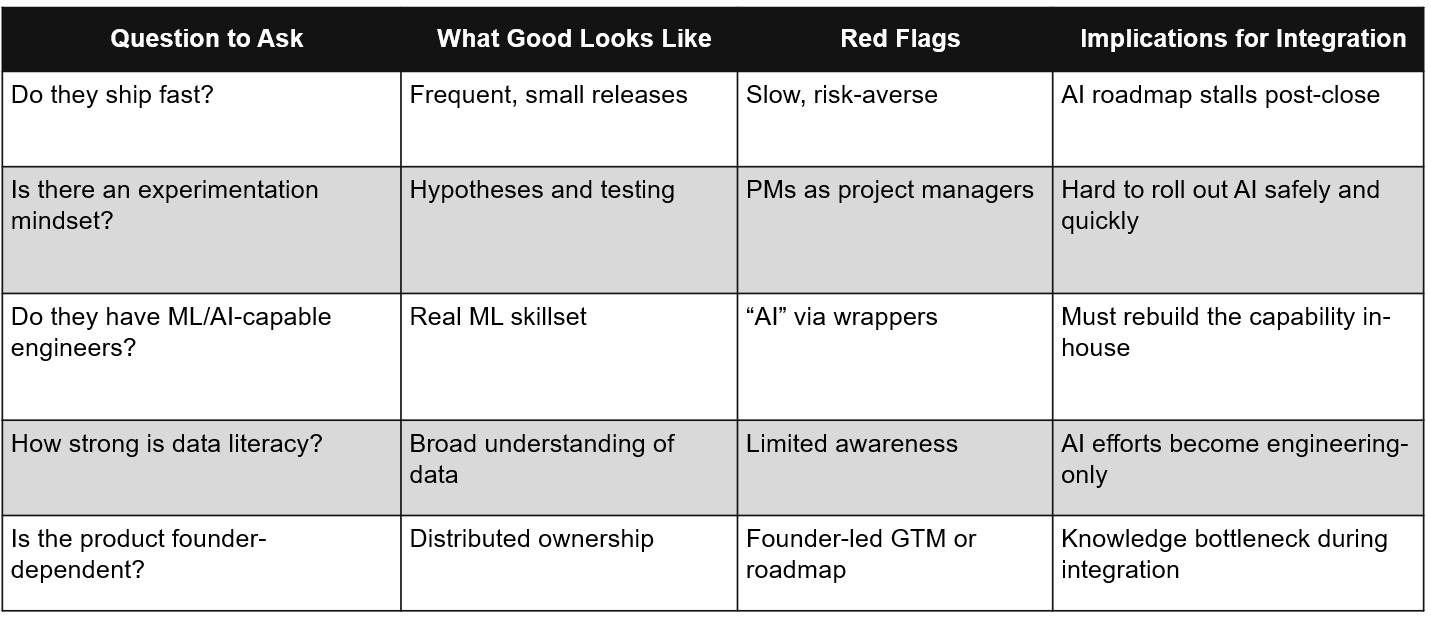

9. Culture & Talent: The Leading Indicator of Future Velocity

In every AI-driven company I’ve met, the real difference isn’t the model, but the mindset. Teams that move fast, experiment, and understand data will naturally find AI leverage. Teams that operate in slow, hierarchical ways rarely do. Culture is a leading indicator of feature velocity and integration success.

Closing Thoughts

AI-readiness isn’t a feature checklist. It’s a reflection of how well a company has built its foundations: data, workflows, decisioning, engineering, business model, GTM, security and governance, unit economics of inference, and culture. When these layers are strong, AI accelerates everything. When they’re weak, AI exposes every crack.

Although this framework is designed for evaluating tuck-ins and AI partnerships, it’s just as useful as an internal health check. The same foundations determine whether your own SaaS platform is ready to absorb AI, scale it, and convert it into a product advantage.

If you’re evaluating tuck-ins or want the full expanded AI-readiness diligence framework, including deeper technical checks, integration flags, and evaluation templates, feel free to reach out to me.

If you’re building, buying, or operating in this space, I’d love to compare notes.

You can reach me at faraaz@inorganicedge.com or on LinkedIn.